Author Contributions

Conceptualization, B.B., R.P. and S.S.; methodology, R.P., S.S., R.F.-B. and G.G.-M.; software, B.B. and S.S.; validation, R.P., S.S., R.F.-B. and G.G.-M.; investigation, B.B., R.P., S.S., R.F.-B., G.G.-M. and J.M.M.-M.; resources, R.P. and S.S.; data curation, B.B., R.P. and S.S.; writing – original draft preparation, B.B. and S.S.; writing – review and editing, R.P., S.S. and G.G.-M.; visualization, R.F.-B.; supervision, R.F.-B., G.G.-M. and J.M.M.-M. All authors have read and agreed to the published version of the manuscript.

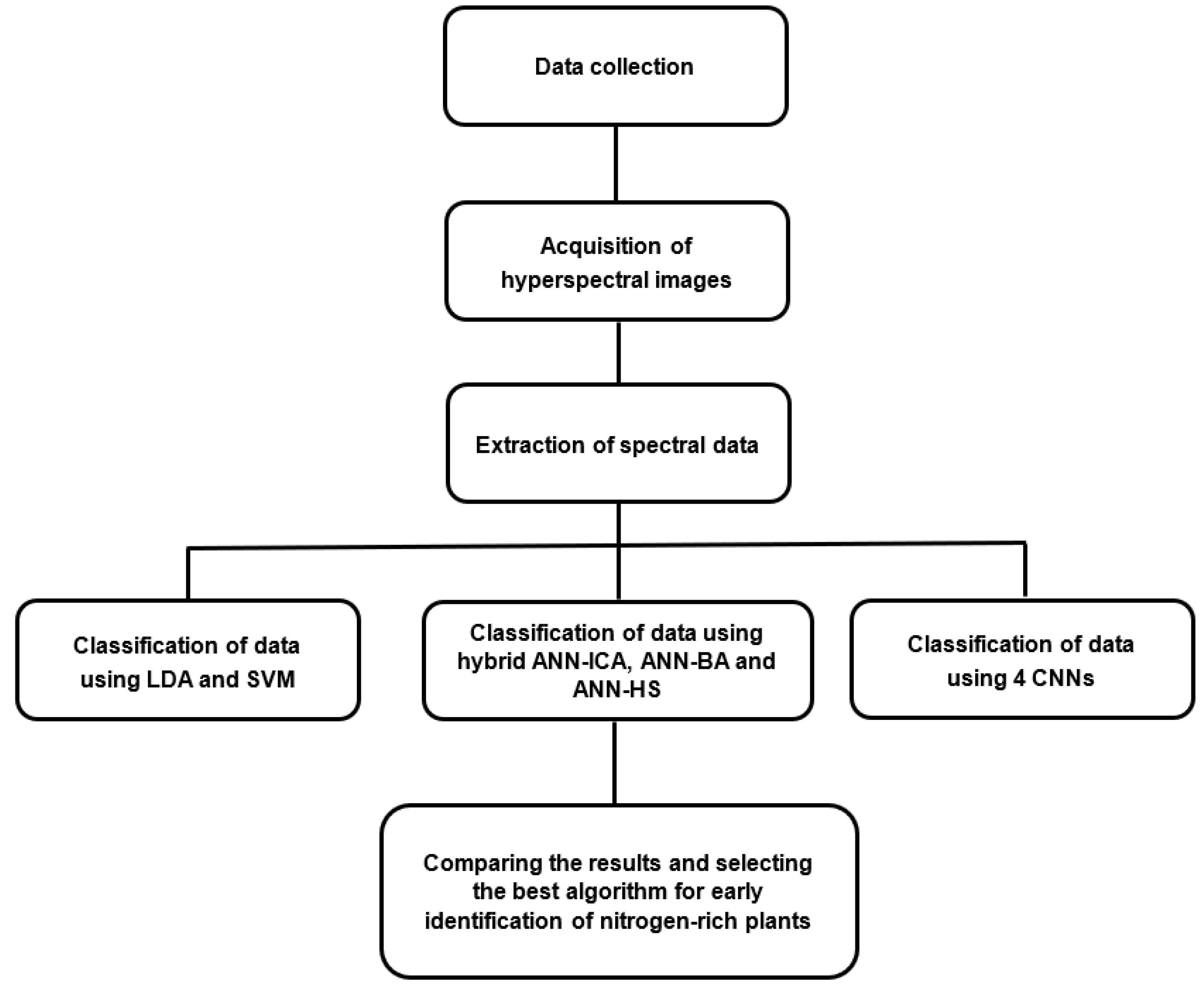

Figure 1.

System description showing the different stages in early identification of nitrogen-rich tomato plants by hyperspectral imaging.

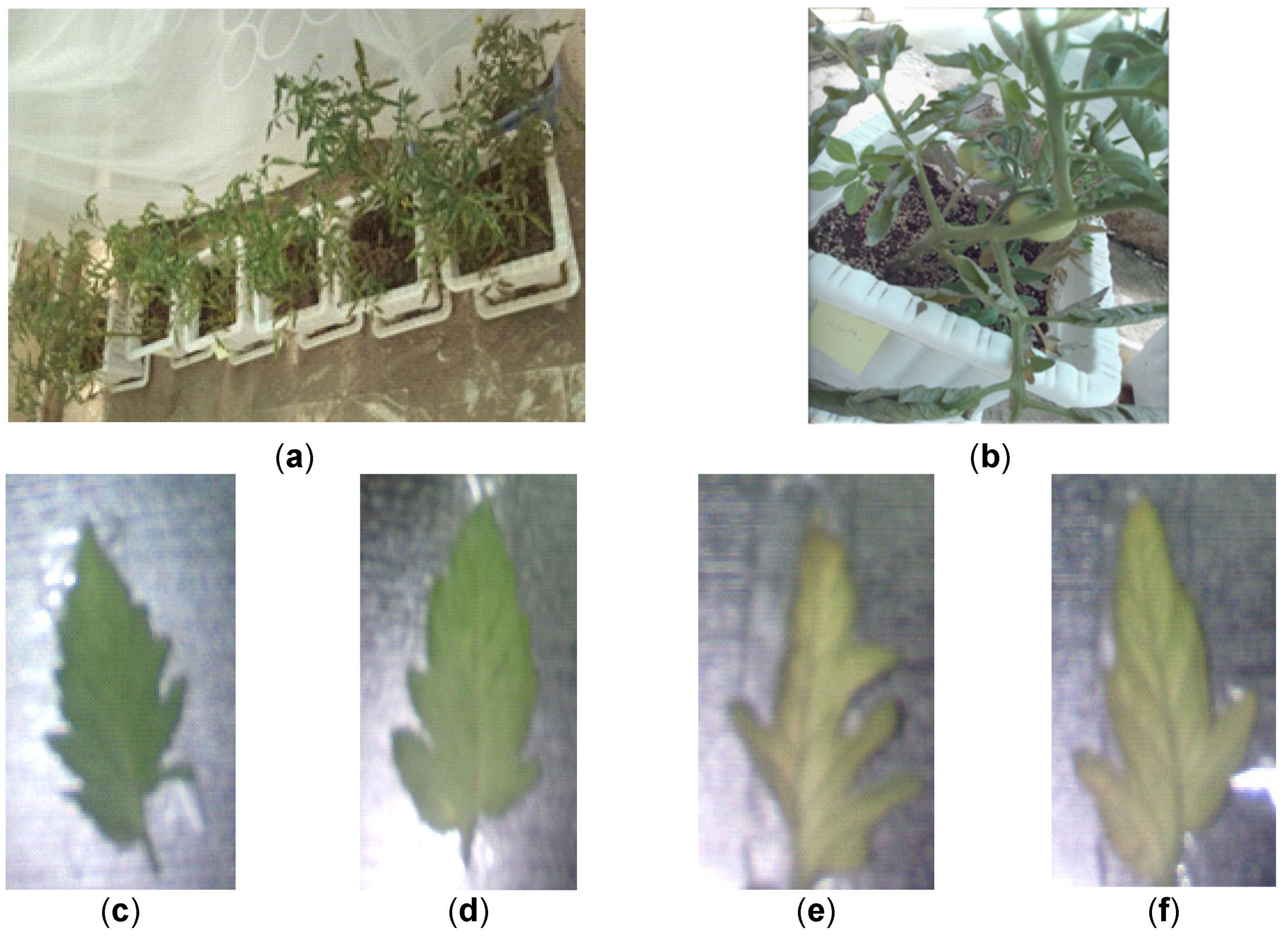

Figure 2.

Some examples of RGB images of the tomato plants prepared for hyperspectral imaging. (a,b) Sample views of the tomato pots. (c–f) Sample tomato leaves after 0 (class A), 1 (class B), 2 (class C) and 3 (class D) days in nitrogen overdose treatment, respectively.

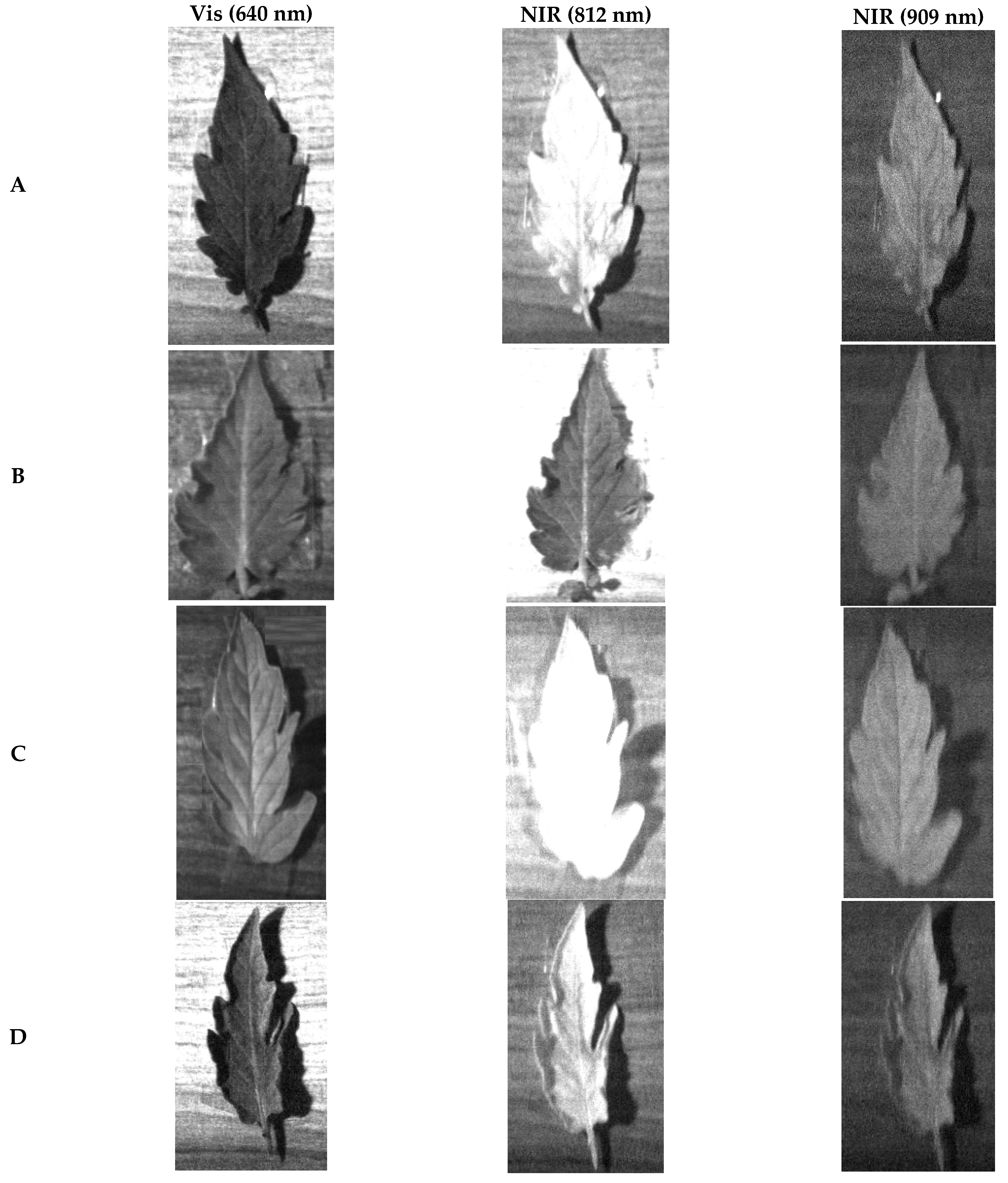

Figure 3.

Images of some tomato leaves captured using hyperspectral imaging on days (A–D), at different wavelengths.

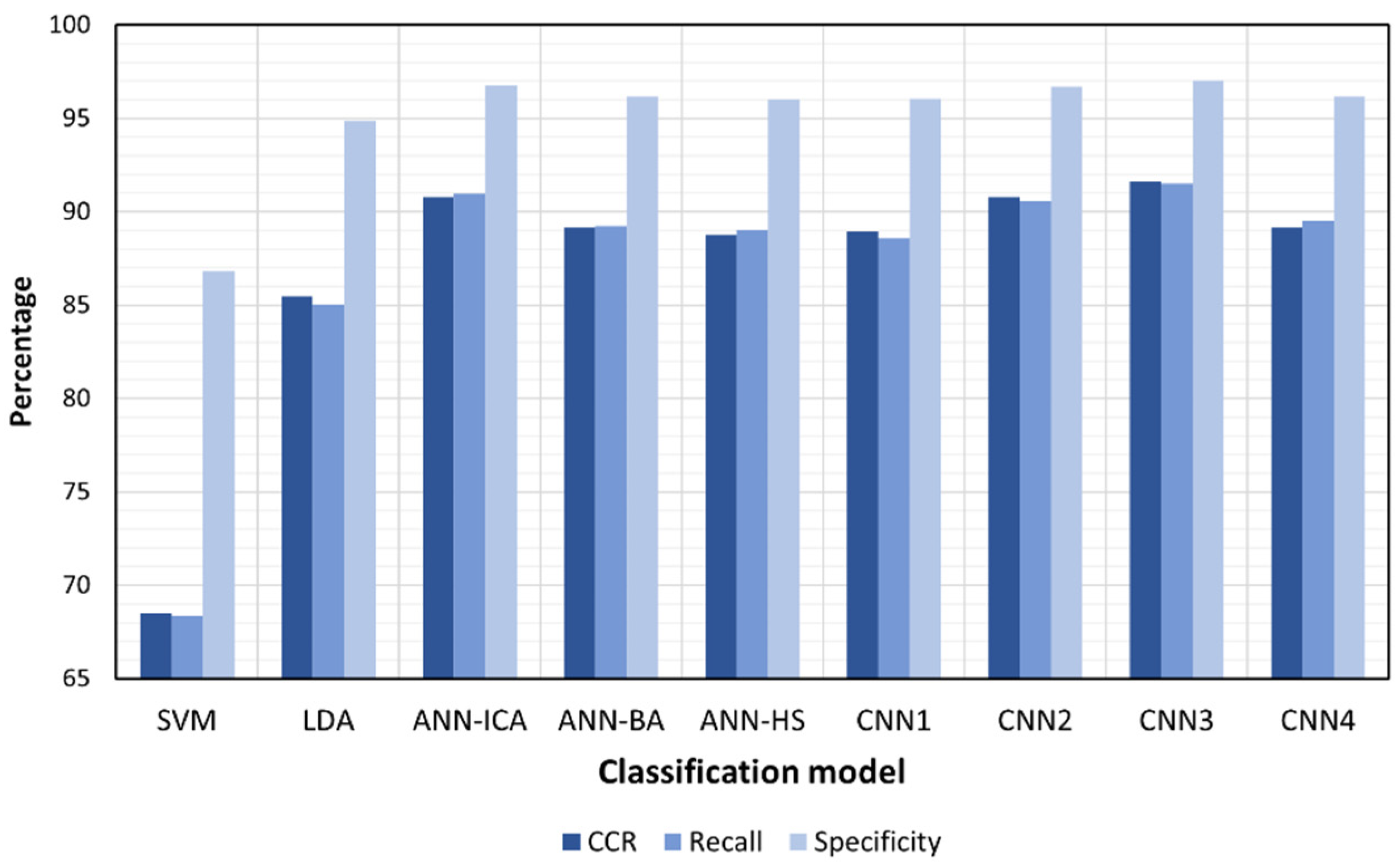

Figure 4.

Comparison of the correct classification rate (CCR), and the average recall and specificity for the four classes obtained by the nine methods for nitrogen treatment classification.

Table 1.

Optimal configuration of the neural network parameters set by ANN-HS. Satlins: symmetric saturating linear transfer function. Radbas: radial basis transfer function. Softmax: softmax transfer function. Trainlm: Levenberg–Marquardt backpropagation. Learnwh: Widrow–Hoff weight/bias learning function.

| Description | Optimal Values |
|---|---|
| Number of hidden layers | 3 |
| Number of neurons per layer | 1st layer: 9; 2nd layer: 17; 3rd layer: 23 |
| Transfer functions per layer | Satlins, radbas, softmax |
| Backpropagation network training function | Trainlm |
| Weight/bias learning function | Learnwh |

Table 2.

Optimal configuration of the neural network parameters set by ANN-BA. Purelin: linear transfer function. Tansig: hyperbolic tangent sigmoid transfer function. Logsig: log-sigmoid transfer function. Trainscg: scaled conjugate gradient backpropagation. Learnk: Kohonen weight learning function.

| Description | Optimal Values |
|---|---|
| Number of hidden layers | 3 |
| Number of neurons per layer | 1st layer: 13; 2nd layer: 21; 3rd layer: 25 |
| Transfer functions per layer | Purelin, tansig, logsig |
| Backpropagation network training function | Trainscg |
| Weight/bias learning function | Learnk |

Table 3.

Optimal configuration of the neural network parameters set by ANN-ICA. Radbas: radial basis transfer function. Tansig: hyperbolic tangent sigmoid transfer function. Trainrp: resilient backpropagation. Learngd: gradient descent weight and bias learning function.

| Description | Optimal Values |
|---|---|
| Number of hidden layers | 2 |
| Number of neurons per layer | 1st layer: 19; 2nd layer: 12 |
| Transfer functions per layer | Radbas, tansig |
| Backpropagation network training function | Trainrp |
| Weight/bias learning function | Learngd |
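
The transfer functions named in Tables 1–3 have standard closed-form definitions. The sketch below writes them out in plain Python for reference. It assumes the usual textbook definitions that these names carry in MATLAB's neural network toolbox; it is not the authors' code, and the numerically stable forms used for `logsig` and `softmax` are an implementation choice made here.

```python
import math


def satlins(n):
    """Symmetric saturating linear: identity on [-1, 1], clipped outside."""
    return max(-1.0, min(1.0, n))


def radbas(n):
    """Radial basis: exp(-n^2), peaking at 1 for n = 0."""
    return math.exp(-n * n)


def purelin(n):
    """Linear transfer function: output equals input."""
    return n


def tansig(n):
    """Hyperbolic tangent sigmoid; 2 / (1 + exp(-2n)) - 1 equals tanh(n)."""
    return math.tanh(n)


def logsig(n):
    """Log-sigmoid 1 / (1 + exp(-n)), written to avoid overflow for large |n|."""
    if n >= 0:
        return 1.0 / (1.0 + math.exp(-n))
    e = math.exp(n)
    return e / (1.0 + e)


def softmax(values):
    """Softmax over a list of values; subtracting the maximum avoids overflow."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]
```

Unlike the other five, `softmax` acts on a whole layer at once, so its outputs are positive and sum to one.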

Table 4.

Description of the first convolutional neural network (CNN1) for the classification of the nitrogen excess class in tomato leaves.

| Layer (Type) | Output Shape | Number of Parameters |
|---|---|---|
| conv1d_1 (Conv1D) | (316, 32) | 256 |
| max_pooling1d (MaxPooling) | (158, 32) | 0 |
| conv1d_2 (Conv1D) | (156, 64) | 6208 |
| max_pooling1d_1 (MaxPooling) | (78, 64) | 0 |
| conv1d_3 (Conv1D) | (76, 128) | 24,704 |
| flatten (Flatten) | (9728) | 0 |
| dense (Dense) | (4) | 38,916 |
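
The output shapes and parameter counts in Table 4 can be cross-checked with the usual Conv1D arithmetic. The sketch below is a consistency check, not the authors' model definition. It assumes unpadded stride-1 convolutions, pooling windows of size 2, a single-channel input of length 322, and kernel sizes of 7, 3 and 3. None of these values is stated in the table; they are inferred because they reproduce its numbers.

```python
def conv1d(length, channels_in, filters, kernel):
    """Unpadded, stride-1 Conv1D: new length, new channel count, parameters."""
    out_len = length - kernel + 1
    params = filters * (kernel * channels_in + 1)  # weights plus one bias per filter
    return out_len, filters, params


def max_pool(length, channels, pool=2):
    """Max pooling with window and stride `pool`; it has no parameters."""
    return length // pool, channels, 0


def cnn1_summary(input_length=322, input_channels=1, classes=4):
    """Return (output_shape, parameter_count) per layer for the assumed CNN1."""
    # Kernel sizes 7, 3, 3 and the input length are inferred assumptions.
    layers = [("conv", 32, 7), ("pool",), ("conv", 64, 3), ("pool",), ("conv", 128, 3)]
    length, channels = input_length, input_channels
    rows = []
    for layer in layers:
        if layer[0] == "conv":
            _, filters, kernel = layer
            length, channels, params = conv1d(length, channels, filters, kernel)
        else:
            length, channels, params = max_pool(length, channels)
        rows.append(((length, channels), params))
    flat = length * channels
    rows.append(((flat,), 0))                       # flatten
    rows.append(((classes,), classes * (flat + 1)))  # dense: weights plus biases
    return rows


for shape, params in cnn1_summary():
    print(shape, params)
```

Under these assumptions every row matches Table 4, including the 9728-element flattened vector and the 38,916 parameters of the final dense layer.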

Table 5.

Description of the second convolutional neural network (CNN2) for the classification of the nitrogen excess class in tomato leaves.

| Layer (Type) | Output Shape | Number of Parameters |
|---|---|---|
| conv1d_1 (Conv1D) | (316, 32) | 256 |
| max_pooling1d_1 (MaxPooling) | (158, 32) | 0 |
| conv1d_2 (Conv1D) | (154, 64) | 10,304 |
| max_pooling1d_2 (MaxPooling) | (77, 64) | 0 |
| conv1d_3 (Conv1D) | (73, 128) | 41,088 |
| max_pooling1d_3 (MaxPooling) | (36, 128) | 0 |
| conv1d_4 (Conv1D) | (34, 256) | 98,560 |
| max_pooling1d_4 (MaxPooling) | (17, 256) | 0 |
| conv1d_5 (Conv1D) | (15, 512) | 393,728 |
| flatten (Flatten) | (7680) | 0 |
| dense (Dense) | (4) | 30,724 |

Table 6.

Description of the third convolutional neural network (CNN3) for the classification of the nitrogen excess class in tomato leaves.

| Layer (Type) | Output Shape | Number of Parameters |
|---|---|---|
| conv1d_1 (Conv1D) | (316, 64) | 512 |
| max_pooling1d_1 (MaxPooling) | (158, 64) | 0 |
| conv1d_2 (Conv1D) | (154, 128) | 41,088 |
| conv1d_3 (Conv1D) | (150, 128) | 82,048 |
| max_pooling1d_1 (MaxPooling) | (75, 128) | 0 |
| conv1d_4 (Conv1D) | (71, 256) | 164,096 |
| max_pooling1d_2 (MaxPooling) | (35, 256) | 0 |
| conv1d_5 (Conv1D) | (31, 256) | 327,936 |
| max_pooling1d_3 (MaxPooling) | (15, 256) | 0 |
| conv1d_6 (Conv1D) | (13, 512) | 393,728 |
| flatten_10 (Flatten) | (6656) | 0 |
| dense_10 (Dense) | (4) | 26,628 |

Table 7.

Description of the fourth convolutional neural network (CNN4) for the classification of the nitrogen excess class in tomato leaves.

| Layer (Type) | Output Shape | Number of Parameters |
|---|---|---|
| conv1d_1 (Conv1D) | (316, 64) | 512 |
| max_pooling1d_1 (MaxPooling) | (158, 64) | 0 |
| conv1d_2 (Conv1D) | (154, 128) | 41,088 |
| conv1d_3 (Conv1D) | (150, 128) | 82,048 |
| max_pooling1d_1 (MaxPooling) | (75, 128) | 0 |
| conv1d_4 (Conv1D) | (71, 256) | 164,096 |
| conv1d_5 (Conv1D) | (67, 256) | 327,936 |
| max_pooling1d_2 (MaxPooling) | (33, 256) | 0 |
| conv1d_6 (Conv1D) | (31, 512) | 393,728 |
| max_pooling1d_3 (MaxPooling) | (15, 512) | 0 |
| conv1d_7 (Conv1D) | (13, 512) | 786,944 |
| flatten (Flatten) | (6656) | 0 |
| dense (Dense) | (4) | 26,628 |

Table 8.

SVM classifier performance using the confusion matrix, the classification error per class, the area under the ROC curve (AUC) and the correct classification rate (CCR).

| Class | A | B | C | D | Total Data | Misclassified (%) | AUC | CCR (%) |
|---|---|---|---|---|---|---|---|---|
| A | 59 | 7 | 37 | 9 | 112 | 89.83 | 0.719 | 68.50 |
| B | 1 | 114 | 5 | 1 | 121 | 6.14 | 0.919 | |
| C | 20 | 22 | 88 | 9 | 139 | 57.95 | 0.725 | |
| D | 12 | 9 | 22 | 74 | 117 | 58.11 | 0.790 | |

Table 9.

Performance evaluation of SVM classifier for classes A, B, C and D using different criteria.

| Class | Recall | Accuracy | Specificity | FP-Rate | Precision | F-Score |
|---|---|---|---|---|---|---|
| A | 52.67 | 79.57 | 89.32 | 10.67 | 64.13 | 57.84 |
| B | 94.21 | 88.15 | 85.32 | 14.67 | 75 | 83.51 |
| C | 63.30 | 74.44 | 79.42 | 20.57 | 57.89 | 60.48 |
| D | 63.24 | 84.38 | 93.21 | 6.79 | 79.56 | 70.47 |
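
The recall, precision and F-score columns of Table 9, and the CCR in Table 8, follow directly from the SVM confusion matrix. The sketch below shows that calculation using the standard definitions. It assumes that rows of the confusion matrix are true classes and columns are predicted classes, which is consistent with the row sums in the "Total Data" column. The function name `per_class_metrics` is illustrative and not taken from the paper.

```python
def per_class_metrics(cm, labels):
    """Recall, precision and F-score (in %) per class, plus the overall CCR.

    cm[i][j] counts samples of true class i predicted as class j (assumed layout).
    Every class is assumed to have at least one true and one predicted sample.
    """
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    metrics = {}
    for i, label in enumerate(labels):
        tp = cm[i][i]
        actual = sum(cm[i])                     # all samples truly in class i
        predicted = sum(row[i] for row in cm)   # all samples assigned to class i
        recall = 100.0 * tp / actual
        precision = 100.0 * tp / predicted
        f_score = 2.0 * recall * precision / (recall + precision)
        metrics[label] = {"recall": recall, "precision": precision, "f_score": f_score}
    ccr = 100.0 * correct / total
    return metrics, ccr


# SVM confusion matrix from Table 8.
svm_cm = [
    [59, 7, 37, 9],
    [1, 114, 5, 1],
    [20, 22, 88, 9],
    [12, 9, 22, 74],
]
svm_metrics, svm_ccr = per_class_metrics(svm_cm, ["A", "B", "C", "D"])
for label, values in svm_metrics.items():
    print(label, {name: round(value, 2) for name, value in values.items()})
print("CCR", round(svm_ccr, 2))
```

For the SVM matrix these values agree with the tabulated recall, precision, F-score and CCR to within rounding in the second decimal place.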

Table 10.

LDA classifier performance using the confusion matrix, the classification error per class, the area under the ROC curve (AUC) and the correct classification rate (CCR).

| Class | A | B | C | D | Total Data | Misclassified (%) | AUC | CCR (%) |
|---|---|---|---|---|---|---|---|---|
| A | 74 | 0 | 34 | 4 | 112 | 51.35 | 0.920 | 85.48 |
| B | 1 | 112 | 8 | 0 | 121 | 8.04 | 0.995 | |
| C | 15 | 0 | 124 | 0 | 139 | 12.10 | 0.942 | |
| D | 5 | 0 | 4 | 108 | 117 | 8.33 | 0.977 | |

Table 11.

Performance evaluation of LDA classifier for classes A, B, C and D using different criteria.

| Class | Recall | Accuracy | Specificity | FP-Rate | Precision | F-Score |
|---|---|---|---|---|---|---|
| A | 66.07 | 87.63 | 94.24 | 5.75 | 77.89 | 71.49 |
| B | 92.56 | 97.89 | 100 | 0 | 100 | 96.13 |
| C | 89.20 | 87.26 | 86.47 | 13.52 | 72.94 | 80.25 |
| D | 92.30 | 96.98 | 98.72 | 1.27 | 96.42 | 94.32 |

Table 12.

ANN-ICA classifier performance using the confusion matrix, the classification error per class, the area under the ROC curve (AUC) and the correct classification rate (CCR).

| Class | A | B | C | D | Total Data | Misclassified (%) | AUC | CCR (%) |
|---|---|---|---|---|---|---|---|---|
| A | 98 | 0 | 13 | 1 | 112 | 12.50 | 0.966 | 90.79 |
| B | 3 | 113 | 5 | 0 | 121 | 6.61 | 0.992 | |
| C | 14 | 6 | 119 | 0 | 139 | 14.39 | 0.972 | |
| D | 1 | 2 | 0 | 114 | 117 | 2.56 | 0.999 | |

Table 13.

Performance evaluation of ANN-ICA classifier for classes A, B, C and D using different criteria.

| Class | Recall | Accuracy | Specificity | FP-Rate | Precision | F-Score |
|---|---|---|---|---|---|---|
| A | 87.50 | 93.27 | 95.05 | 4.94 | 84.48 | 85.96 |
| B | 93.38 | 96.52 | 97.64 | 2.35 | 93.38 | 93.38 |
| C | 85.61 | 92.11 | 94.75 | 5.24 | 86.86 | 86.23 |
| D | 97.43 | 99.10 | 99.69 | 0.30 | 99.13 | 98.27 |

Table 14.

ANN-BA classifier performance using the confusion matrix, the classification error per class, the area under the ROC curve (AUC) and the correct classification rate (CCR).

| Class | A | B | C | D | Total Data | Misclassified (%) | AUC | CCR (%) |
|---|---|---|---|---|---|---|---|---|
| A | 91 | 0 | 20 | 1 | 112 | 23.08 | 0.959 | 89.16 |
| B | 0 | 117 | 4 | 0 | 121 | 3.42 | 0.994 | |
| C | 14 | 8 | 117 | 0 | 139 | 18.80 | 0.963 | |
| D | 1 | 5 | 0 | 111 | 117 | 5.41 | 0.999 | |

Table 15.

Performance evaluation of ANN-BA classifier for classes A, B, C and D using different criteria.

| Class | Recall | Accuracy | Specificity | FP-Rate | Precision | F-Score |
|---|---|---|---|---|---|---|
| A | 81.25 | 92.37 | 95.83 | 4.16 | 85.84 | 83.40 |
| B | 96.69 | 96.24 | 96.08 | 3.92 | 90.00 | 93.22 |
| C | 84.17 | 90.45 | 93.00 | 6.99 | 82.97 | 83.57 |
| D | 94.87 | 98.41 | 99.69 | 0.30 | 99.10 | 96.94 |

Table 16.

ANN-HS classifier performance using the confusion matrix, the classification error per class, the area under the ROC curve (AUC) and the correct classification rate (CCR).

| Class | A | B | C | D | Total Data | Misclassified (%) | AUC | CCR (%) |
|---|---|---|---|---|---|---|---|---|
| A | 94 | 1 | 16 | 1 | 112 | 19.15 | 0.974 | 88.75 |
| B | 1 | 112 | 7 | 1 | 121 | 8.04 | 0.992 | |
| C | 15 | 11 | 113 | 0 | 139 | 23.01 | 0.950 | |
| D | 1 | 0 | 1 | 115 | 117 | 1.74 | 0.999 | |

Table 17.

Performance evaluation of ANN-HS classifier for classes A, B, C and D using different criteria.

| Class | Recall | Accuracy | Specificity | FP-Rate | Precision | F-Score |
|---|---|---|---|---|---|---|
| A | 83.92 | 92.53 | 95.23 | 4.76 | 84.68 | 84.30 |
| B | 92.56 | 95.38 | 96.40 | 3.59 | 90.32 | 91.42 |
| C | 81.29 | 89.66 | 93.04 | 6.95 | 82.48 | 81.88 |
| D | 98.29 | 99.08 | 99.37 | 0.62 | 98.29 | 98.29 |

Table 18.

Performance of the CNN classifiers using the confusion matrix, the classification error per class, the area under the ROC curve (AUC) and the correct classification rate (CCR).

| Structure | Class | A | B | C | D | Total Data | Misclassified (%) | AUC | CCR (%) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | A | 82 | 4 | 26 | 0 | 112 | 36.59 | 0.965 | 88.95 |
| 1 | B | 1 | 115 | 5 | 0 | 121 | 5.22 | 0.994 | |
| 1 | C | 6 | 5 | 128 | 0 | 139 | 8.59 | 0.969 | |
| 1 | D | 2 | 2 | 3 | 110 | 117 | 6.36 | 0.999 | |
| 2 | A | 87 | 9 | 15 | 1 | 112 | 28.74 | 0.985 | 90.79 |
| 2 | B | 0 | 117 | 4 | 0 | 121 | 3.42 | 0.982 | |
| 2 | C | 3 | 9 | 127 | 0 | 139 | 9.45 | 0.981 | |
| 2 | D | 1 | 2 | 1 | 113 | 117 | 3.54 | 0.999 | |
| 3 | A | 96 | 4 | 12 | 0 | 112 | 16.70 | 0.981 | 91.61 |
| 3 | B | 0 | 118 | 3 | 0 | 121 | 2.54 | 0.989 | |
| 3 | C | 6 | 6 | 127 | 0 | 139 | 9.45 | 0.977 | |
| 3 | D | 1 | 5 | 4 | 107 | 117 | 9.35 | 0.995 | |
| 4 | A | 100 | 3 | 6 | 3 | 112 | 12.00 | 0.985 | 89.16 |
| 4 | B | 3 | 109 | 9 | 0 | 121 | 11.01 | 0.992 | |
| 4 | C | 21 | 5 | 113 | 0 | 139 | 23.01 | 0.978 | |
| 4 | D | 0 | 1 | 2 | 114 | 117 | 2.63 | 0.996 | |

Table 19.

Performance evaluation of the four CNN classifiers for classes A, B, C and D using different criteria.

| Structure | Class | Recall | Accuracy | Specificity | FP-Rate | Precision | F-Score |
|---|---|---|---|---|---|---|---|
| 1 | A | 73.21 | 91.77 | 97.51 | 2.48 | 90.10 | 80.78 |
| 1 | B | 95.04 | 96.23 | 96.67 | 3.32 | 91.26 | 93.11 |
| 1 | C | 92.08 | 90.62 | 90.02 | 9.97 | 79.01 | 85.04 |
| 1 | D | 94.01 | 98.41 | 100 | 0 | 100 | 96.91 |
| 2 | A | 77.67 | 93.86 | 98.89 | 1.10 | 95.604 | 85.71 |
| 2 | B | 96.69 | 94.87 | 94.23 | 5.76 | 85.40 | 90.69 |
| 2 | C | 91.36 | 93.27 | 94.06 | 5.93 | 86.39 | 88.81 |
| 2 | D | 96.58 | 98.88 | 99.69 | 0.30 | 99.12 | 97.83 |
| 3 | A | 85.71 | 95.11 | 98.05 | 1.94 | 93.20 | 89.30 |
| 3 | B | 97.52 | 96.13 | 95.65 | 4.34 | 88.72 | 92.91 |
| 3 | C | 91.36 | 93.52 | 94.41 | 5.58 | 86.98 | 89.12 |
| 3 | D | 91.45 | 97.81 | 100 | 0 | 100 | 95.53 |
| 4 | A | 89.28 | 92.37 | 93.33 | 6.60 | 80.64 | 84.74 |
| 4 | B | 90.08 | 95.40 | 97.32 | 2.67 | 92.37 | 91.21 |
| 4 | C | 81.29 | 91.02 | 95.00 | 5.00 | 86.92 | 84.01 |
| 4 | D | 97.43 | 98.64 | 99.07 | 0.90 | 97.43 | 97.43 |